Language is our human superpower. Through words, we can delimit concepts in the huge space of thought, reach out to our fellow human beings, and build bridges of understanding and communication. We seem to be a very special species that either developed or was simply given the ability to use language for communication. If you are human like me, you will probably agree that language is a complex faculty. Indeed, it is written, spoken, heard and read! There are different channels and perspectives inherent to language, and it lies at the very root of our understanding of the world: "And He taught Adam the names, all of them." (Quran 2:31). There are many languages, and they differ from one another. Our tongues have different colors and tones, and they can be grouped into many families, among them the Indo-European languages (Romance, Slavic, Germanic) and the Semitic languages such as Arabic and Hebrew.

Not all languages use an alphabet, really?

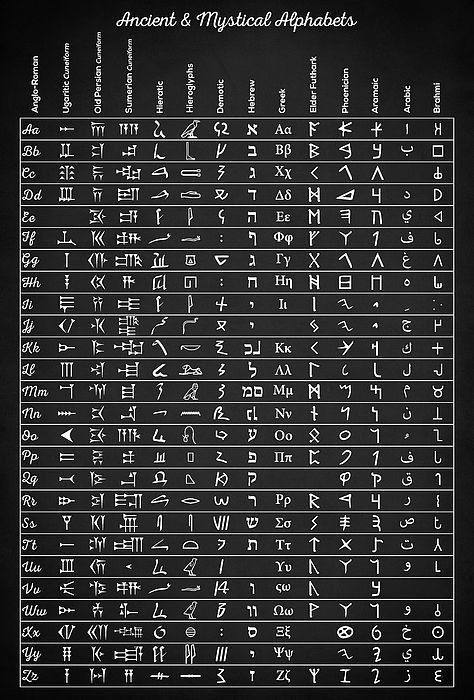

An alphabet is a writing system that consists of a set of symbols, called letters, that represent the sounds or phonemes of a language. In French, the alphabet is composed of 26 letters, while the Arabic alphabet has 28. Letters form words, words make up sentences, sentences build paragraphs, and meaning emerges. Some languages use a different type of writing system, such as logographic characters in Chinese or syllabic scripts in Cherokee and Inuktitut. Japanese combines logographic characters with syllabic scripts, while Korean Hangul is an alphabet whose letters are arranged into syllable blocks. Some languages, such as those of certain indigenous communities that exist only in spoken form, have no writing system at all. Despite all these differences, language seems to be a complex construct built from smaller pieces, just as a body is made of molecules that are themselves composed of atoms.

Natural Language Processing

Language is hard to process for a variety of reasons. One reason is that it is inherently ambiguous, meaning that a single sentence can have multiple possible meanings depending on context. Additionally, language is constantly evolving, making it difficult to keep up with new words and phrases. Furthermore, there are many exceptions to grammar rules, which can make it difficult to understand and generate correct sentences. Finally, understanding the intent behind a statement, or the tone in which it is spoken, can also be difficult.

Natural language processing (NLP) is a field of artificial intelligence (AI) that focuses on enabling computers to understand, interpret, and generate human language. NLP systems use a combination of machine learning algorithms and linguistic rules to analyze and understand natural language input, and to generate output in the form of text or synthesized speech.

There are several key components to natural language processing, including:

Tokenization

Tokenization is the process of breaking down a piece of text into smaller units called tokens. In natural language processing (NLP), it is used to separate text into words, phrases, symbols, or other elements that can be analyzed and processed, depending on the level of granularity desired.

There are several approaches to tokenization, and the appropriate approach will depend on the specific needs of the NLP task at hand. Some common approaches include:

Word tokenization

This involves breaking a piece of text into individual words. This is a common approach for many NLP tasks, as words are a natural unit of meaning in most languages.

```python
import nltk

nltk.download('punkt')  # tokenizer models; newer NLTK releases may need 'punkt_tab' instead

# Split a piece of text into words
text = "This is a sample piece of text to be tokenized."
words = nltk.word_tokenize(text)
print(words)

# Result:
# ['This', 'is', 'a', 'sample', 'piece', 'of', 'text', 'to', 'be', 'tokenized', '.']
```
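To get a feel for what a word tokenizer does under the hood, here is a minimal sketch that uses only Python's built-in `re` module. The function name `simple_word_tokenize` and the pattern are assumptions made for illustration; NLTK's tokenizer handles many more cases (contractions, abbreviations, and so on).

```python
import re

def simple_word_tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches one character that is neither a word
    # character nor whitespace, i.e. a punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = simple_word_tokenize("This is a sample piece of text to be tokenized.")
print(tokens)
```

On this sentence the sketch gives the same tokens as the NLTK example, but it would split a contraction like "don't" into three pieces, which is one reason dedicated tokenizers exist.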

Sentence tokenization

This involves breaking a piece of text into individual sentences. This is useful for tasks such as language translation or text summarization, where it is important to understand the structure and meaning of a text at the level of individual sentences.

```python
import nltk

nltk.download('punkt')  # sentence tokenizer models; newer NLTK releases may need 'punkt_tab' instead

# Split a piece of text into sentences
text = "This is the first sentence. This is the second sentence."
sentences = nltk.sent_tokenize(text)
print(sentences)

# Result:
# ['This is the first sentence.', 'This is the second sentence.']
```
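Sentence splitting looks trivial until abbreviations show up. The following sketch, again assuming nothing beyond the standard `re` module, splits after `.`, `!` or `?` when followed by whitespace. The helper name `simple_sent_tokenize` is hypothetical and the rule is deliberately naive.

```python
import re

def simple_sent_tokenize(text):
    """Split text after '.', '!' or '?' when followed by whitespace."""
    # The lookbehind keeps the punctuation attached to its sentence.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

print(simple_sent_tokenize("This is the first sentence. This is the second sentence."))
print(simple_sent_tokenize("Dr. Smith arrived. He was late."))
```

The first call splits cleanly into two sentences, while the second wrongly breaks after "Dr.", which is the kind of case a trained sentence tokenizer is designed to handle.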

Subword tokenization

This involves breaking a piece of text into units smaller than words, such as morphemes or character n-grams. This can be useful for tasks such as machine translation or text classification, where rare or unseen words can still be represented through the subword units they share with known words.
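As a small illustration of the character n-gram idea, the sketch below pads a word with boundary markers and slides a window over it. The function name `char_ngrams` and the choice of `<` and `>` as markers are assumptions for this example, not part of any particular library.

```python
def char_ngrams(word, n=3):
    """Return the character n-grams of a word, padded with boundary markers."""
    padded = f"<{word}>"  # '<' and '>' mark the start and end of the word
    if len(padded) <= n:
        return [padded]
    # Slide a window of width n across the padded word
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

print(char_ngrams("token"))
# ['<to', 'tok', 'oke', 'ken', 'en>']
```

Two words that share a stem, such as "token" and "tokens", end up sharing most of their n-grams, which is what lets a model relate them.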

Tokenization is a fundamental preprocessing step in many NLP tasks, and it is typically the first step in the process of analyzing and understanding natural language text.

Part-of-speech tagging

Part-of-speech (POS) tagging involves identifying the role that each word plays in a sentence, such as whether it is a noun, verb, adjective, etc. This information is useful for understanding the meaning of a sentence and for determining the appropriate response to a request or command. Some common techniques for POS tagging include:

Rule-based tagging

This involves using a set of manually defined rules to assign POS tags to words. This method relies heavily on the knowledge of a language expert.

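```python
import nltk

nltk.download('punkt')  # needed by word_tokenize
nltk.download('averaged_perceptron_tagger')

# Note: nltk.pos_tag relies on a pretrained averaged perceptron model,
# so it is a statistical tagger rather than a purely rule-based one.
# It is used here as a ready-made baseline.
sentence = "I am learning NLP"
words = nltk.word_tokenize(sentence)
tagged_words = nltk.pos_tag(words)
print(tagged_words)
```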
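For a tagger driven purely by hand-written rules, a minimal sketch could look like the following. The rules, the helper name `rule_based_tag`, and the use of Penn Treebank-style tags are all assumptions chosen for illustration; a real rule set would be far larger.

```python
import re

# Hand-written rules, tried in order; the first match wins.
# Tags follow the Penn Treebank convention (an assumption for this sketch).
RULES = [
    (re.compile(r"^-?\d+(\.\d+)?$"), "CD"),               # numbers
    (re.compile(r".*ing$"), "VBG"),                       # gerunds / present participles
    (re.compile(r".*ed$"), "VBD"),                        # past-tense verbs
    (re.compile(r".*ly$"), "RB"),                         # adverbs
    (re.compile(r"^(a|an|the)$", re.IGNORECASE), "DT"),   # articles
]

def rule_based_tag(words, default="NN"):
    """Tag each word with the first matching rule, or the default tag."""
    tagged = []
    for word in words:
        tag = default
        for pattern, rule_tag in RULES:
            if pattern.match(word):
                tag = rule_tag
                break
        tagged.append((word, tag))
    return tagged

print(rule_based_tag(["I", "am", "learning", "NLP"]))
# [('I', 'NN'), ('am', 'NN'), ('learning', 'VBG'), ('NLP', 'NN')]
```

The output shows both the appeal and the limits of the approach: "learning" is caught by the suffix rule, while "I" and "am" fall through to the default because no rule covers them.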

Statistical tagging

This method uses machine learning algorithms, such as Hidden Markov Models (HMMs) or Conditional Random Fields (CRFs), to automatically learn to assign POS tags based on examples of labeled text.

```python
import nltk
from nltk.corpus import brown
from nltk.tag import HiddenMarkovModelTagger

nltk.download('brown')
nltk.download('punkt')  # needed by word_tokenize

# Train the HMM model on the news portion of the Brown corpus
train_data = brown.tagged_sents(categories='news')
hmm_tagger = HiddenMarkovModelTagger.train(train_data)

# Use the trained model to tag a sentence (the output uses Brown corpus tags)
sentence = "I am learning NLP"
words = nltk.word_tokenize(sentence)
tagged_words = hmm_tagger.tag(words)
print(tagged_words)
```
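To make the idea behind HMM tagging more concrete, here is a toy Viterbi decoder in plain Python. All probabilities below are invented for illustration (they are not estimated from the Brown corpus), and the tag names and the function `viterbi` are assumptions of this sketch rather than NLTK's implementation.

```python
def viterbi(words, tags, start_p, trans_p, emit_p):
    """Return the most probable tag sequence for words under a toy HMM."""
    if not words:
        return []
    # best[i][t] = (probability of the best path ending in tag t at position i,
    #               previous tag on that path)
    best = [{t: (start_p[t] * emit_p[t].get(words[0], 0.0), None) for t in tags}]
    for i in range(1, len(words)):
        column = {}
        for t in tags:
            column[t] = max(
                (best[i - 1][p][0] * trans_p[p][t] * emit_p[t].get(words[i], 0.0), p)
                for p in tags
            )
        best.append(column)
    # Backtrack from the most probable final tag
    path = [max(tags, key=lambda t: best[-1][t][0])]
    for i in range(len(words) - 1, 0, -1):
        path.append(best[i][path[-1]][1])
    return list(reversed(path))

# Made-up model parameters, for illustration only
tags = ["PRON", "VERB", "NOUN"]
start_p = {"PRON": 0.6, "VERB": 0.1, "NOUN": 0.3}
trans_p = {
    "PRON": {"PRON": 0.1, "VERB": 0.8, "NOUN": 0.1},
    "VERB": {"PRON": 0.2, "VERB": 0.2, "NOUN": 0.6},
    "NOUN": {"PRON": 0.1, "VERB": 0.5, "NOUN": 0.4},
}
emit_p = {
    "PRON": {"I": 0.9, "you": 0.1},
    "VERB": {"am": 0.3, "learning": 0.5, "fish": 0.2},
    "NOUN": {"learning": 0.2, "NLP": 0.5, "fish": 0.3},
}

print(viterbi(["I", "am", "learning", "NLP"], tags, start_p, trans_p, emit_p))
# ['PRON', 'VERB', 'VERB', 'NOUN']
```

With these numbers, "learning" is locally more likely to be a noun after "am", yet the best overall path tags it as a verb because of what follows. Scoring whole sequences rather than single words is the point of the algorithm.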

Hybrid tagging

This is a combination of rule-based and statistical methods, where a set of hand-written rules is used to complement a statistical model, for example by tagging words the model has never seen.

```python
import nltk
from nltk.corpus import brown
from nltk.tag import RegexpTagger, UnigramTagger

nltk.download('brown')
nltk.download('punkt')  # needed by word_tokenize

# Hand-written regular expressions mapping word shapes to Brown corpus tags
patterns = [
    (r'^-?[0-9]+(\.[0-9]+)?$', 'CD'),  # numbers
    (r'.*ness$', 'NN'),                # nouns formed from adjectives
    (r'.*ment$', 'NN'),                # nouns formed from verbs
    (r'.*ful$', 'JJ'),                 # adjectives
    (r'.*', 'NN'),                     # nouns (default)
]

# Rule-based tagger built from the patterns above
regexp_tagger = RegexpTagger(patterns)

# Statistical tagger that falls back to the rules for words it has not seen.
# (With the catch-all '.*' pattern, the regexp tagger must be the backoff;
# placed first, it would tag every word and never consult the unigram model.)
hybrid_tagger = UnigramTagger(brown.tagged_sents(categories='news'),
                              backoff=regexp_tagger)

# Use the hybrid tagger to tag a sentence
sentence = "I am learning NLP"
words = nltk.word_tokenize(sentence)
tagged_words = hybrid_tagger.tag(words)
print(tagged_words)
```

Neural tagging

This uses neural networks to assign POS tags, usually with architectures like Recurrent Neural Networks (RNNs) or Transformers.

```python
# Install the library first, e.g. with: pip install flair
from flair.data import Sentence
from flair.models import SequenceTagger

# Load the pre-trained model
tagger = SequenceTagger.load('pos-fast')

# Wrap the text in a Sentence object; predict() adds the tags in place
sentence = Sentence("I am learning NLP")
tagger.predict(sentence)
print(sentence.to_tagged_string())
```

Dictionary-based tagging

This method uses a dictionary of words and their corresponding POS tags to assign POS tags to words in a sentence.

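```python
import nltk
from nltk.tag import AffixTagger
from nltk.corpus import brown

nltk.download('brown')

# Create the AffixTagger, which learns tags from word prefixes or suffixes.
# Assumption: trained on the same Brown news sentences as the examples above.
affix_tagger = AffixTagger(brown.tagged_sents(categories='news'))
```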
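The lookup idea itself can be sketched in a few lines of plain Python. The lexicon entries, the helper name `dictionary_tag`, and the Penn Treebank-style tags are hypothetical and only meant to show the mechanism: look the word up, and fall back to a default tag when it is missing.

```python
# A tiny hand-made lexicon (hypothetical entries, Penn Treebank-style tags)
LEXICON = {
    "i": "PRP",
    "am": "VBP",
    "learning": "VBG",
    "the": "DT",
}

def dictionary_tag(words, lexicon=LEXICON, default="NN"):
    """Look each word up in the lexicon; fall back to a default tag."""
    return [(w, lexicon.get(w.lower(), default)) for w in words]

print(dictionary_tag(["I", "am", "learning", "NLP"]))
# [('I', 'PRP'), ('am', 'VBP'), ('learning', 'VBG'), ('NLP', 'NN')]
```

A pure lookup cannot resolve words that take several tags depending on context, which is why dictionaries are usually combined with one of the other techniques.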

In the second part, we will create our own natural language processing tool and try out some cool stuff. Stay tuned.